Small Models, Big Future

A couple of years ago, running capable language models locally seemed impractical. Now? I’m doing it daily, with predictable costs and complete privacy.

The AI world has been obsessed with making models bigger. GPT-4, Claude Opus, the latest Gemini - they’re all chasing the same dragon: more parameters, more compute, more everything. And sure, they’re impressive. They can write poetry, debug code, explain quantum physics. But there’s a catch: they’re also massive, slow, and expensive as hell.

Here’s my hot take: the future isn’t going to be dominated by these behemoths. The future is small language models (SLMs) doing the heavy lifting, with the big models playing very specific, specialized roles.

What even is a Small Language Model?

Let’s get this out of the way first. When I say “small”, I’m talking about models in the 3B to 12B parameter range. Compare that to GPT-4’s rumored trillion+ parameters, and you’ll see why I call them small.

But here’s the thing: small doesn’t mean stupid.

Models like SmolLM3-3B, Gemma-3-12B, or Llama-3.1-8B are punching way above their weight class. They can handle commonsense reasoning, follow instructions, call tools, generate code - basically everything you need for practical, day-to-day tasks. And they can do it on your laptop. Or that crusty old desktop sitting in the corner.

The Big Model Problem

Don’t get me wrong - I love Claude Opus as much as the next person. But let’s talk about what happens when you actually try to use these massive models in production:

- They’re slow. Every token generation feels like waiting for dial-up internet.

- They’re expensive. API costs scale linearly with usage, and those extended context windows multiply the cost per request.

- They require serious hardware. Want to run a model with a 200K context window locally? You’re looking at 80GB+ of VRAM. That’s multiple high-end GPUs.

- Privacy concerns. Your data is going to someone else’s servers. Every API call is a potential compliance nightmare.

- They’re overkill. Do you really need a trillion parameters to classify customer support tickets?

The dirty secret of the AI industry is that most tasks don’t need that much firepower. You’re essentially using a sledgehammer to hang a picture frame.

Why Small Models Actually Make Sense

Here’s where it gets interesting. SLMs are already powerful enough for the vast majority of agentic AI tasks. Let me break that down:

Speed and Efficiency

When you shrink a model down to something that fits on consumer hardware, magic happens. Inference becomes fast - like, really fast. We’re talking milliseconds instead of seconds. For agentic systems that need to make decisions repeatedly, this is a game-changer.

A 7B parameter model needs roughly 4-16GB of VRAM depending on quantization: around 4-5GB at 4-bit, up to about 14GB at 16-bit, plus some room for context. That’s a consumer GPU - a 3060, 4060 Ti, or similar. Hardware that’s actually accessible. Compare that to the 80GB+ VRAM requirements for running large models with extended context windows, and the economics become crystal clear.

Privacy and Security

This is where things get really interesting. Local AI means your data never leaves your infrastructure. No API calls to external servers. No wondering if your sensitive data is being used to train the next model. No waiting for vendor security audits. It’s all local, all private, all under your control.

For enterprise use cases, this is huge. You can finally run AI on:

- Customer PII without violating GDPR

- Medical records without HIPAA nightmares

- Financial data without compliance teams having a meltdown

- Proprietary code without worrying about IP leakage

The compliance story alone is worth the switch for many companies. Being able to say “our AI never sends data to third parties” is a game-changer for regulated industries.

Economics and Cost Predictability

Let’s do some napkin math. Running a small model locally costs you… basically nothing after the initial setup. The hardware investment is one-time. A consumer GPU with 16GB VRAM will get you far with small models.

Running Claude Opus via API? That’s real money, every single request. And it scales linearly with usage. Worse, it’s unpredictable. You can’t easily forecast costs when they depend on user behavior, context window sizes, and token counts.

With local models:

- Fixed costs: You know exactly what you’re spending on hardware

- Zero marginal cost: Each inference costs you nothing

- No surprise bills: Your usage can spike 10x and your costs stay flat

At scale, the difference is astronomical. A company making millions of inference requests per day could be looking at hundreds of thousands of dollars in API costs. Or… a few thousand dollars in hardware that lasts for years.
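To make the napkin math concrete, here’s a rough break-even sketch. Every number in it (API price per million tokens, tokens per request, hardware price, power cost) is a made-up placeholder, not a quote from any provider, and it ignores whether one box can actually keep up with the request volume. Plug in your own figures.

```python
def api_cost_per_day(requests_per_day, tokens_per_request, usd_per_million_tokens):
    """API spend grows linearly with request volume."""
    return requests_per_day * tokens_per_request * usd_per_million_tokens / 1_000_000


def breakeven_days(hardware_usd, power_usd_per_day, api_usd_per_day):
    """Days until a one-time hardware purchase beats paying per token.

    Returns None when running locally is not cheaper per day than the API.
    """
    daily_saving = api_usd_per_day - power_usd_per_day
    if daily_saving <= 0:
        return None
    return hardware_usd / daily_saving


# Hypothetical inputs, chosen only to show the shape of the calculation.
api_daily = api_cost_per_day(
    requests_per_day=100_000,
    tokens_per_request=1_000,
    usd_per_million_tokens=10.0,
)
days = breakeven_days(hardware_usd=3_000, power_usd_per_day=3.0, api_usd_per_day=api_daily)
print(f"API: ${api_daily:,.0f}/day, hardware pays for itself in about {days:.1f} days")
```

The useful part isn’t the specific answer, it’s that the API line grows with traffic while the local line is mostly flat (electricity aside).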

The Hybrid Approach

Here’s where my premise really kicks in: you don’t need one giant model doing everything.

Think about it differently. You can have:

- Small models handling routine tasks: classification, simple Q&A, tool calling, code generation

- Large models as specialized experts: complex reasoning, creative writing, edge cases that need serious firepower

This is similar to how we already build distributed systems. You don’t run everything on one massive server. You split workloads, use the right tool for the right job, and optimize for your actual needs.

What This Looks Like in Practice

Imagine your AI assistant handling requests with a tiered approach:

- ~90% of requests hit a small, local model. Instant responses, zero marginal cost, complete privacy.

- ~9% of requests need slightly more power, so they hit a medium-sized model you run on your home server or a cheap cloud instance.

- ~1% of requests are genuinely complex and get routed to a frontier model like Claude or GPT-4.

You get the best of both worlds: the speed and efficiency of small models for everyday tasks, with the safety net of large models when you genuinely need them.

When to Escalate to Large Models

Not every task needs a trillion parameters. Here’s when you should route to larger models:

- Complex multi-step reasoning requiring 5+ logical dependencies

- Novel creative writing where originality matters more than speed

- Sophisticated code architecture decisions (small models excel at generating code, but architecting new systems may need more reasoning power)

- Ambiguous edge cases where context and nuance are critical

- Domain expertise in specialized fields like advanced mathematics or niche technical domains

For everything else? Small models handle it just fine.
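Here’s a minimal sketch of what that routing decision could look like in code. The field names, the tier labels, and the "3 steps means medium" threshold are all my own assumptions for illustration; in a real system those signals would come from a classifier (quite possibly the small model itself).

```python
from dataclasses import dataclass


@dataclass
class Request:
    text: str
    reasoning_steps: int = 1            # estimated number of dependent steps
    needs_originality: bool = False     # novel creative writing
    is_architecture_decision: bool = False
    is_ambiguous: bool = False          # nuance-heavy edge case
    is_specialist_domain: bool = False  # e.g. advanced mathematics


def choose_tier(req: Request) -> str:
    """Return 'small', 'medium', or 'large' for a request."""
    escalate = (
        req.reasoning_steps >= 5
        or req.needs_originality
        or req.is_architecture_decision
        or req.is_ambiguous
        or req.is_specialist_domain
    )
    if escalate:
        return "large"
    if req.reasoning_steps >= 3:        # assumed cut-off for the middle tier
        return "medium"
    return "small"


print(choose_tier(Request("Classify this support ticket")))
print(choose_tier(Request("Plan a migration", reasoning_steps=6)))
```

Because the router is plain code, you can log which tier each request hit and check whether your real traffic looks anything like a 90/9/1 split.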

Making Small Models Smarter

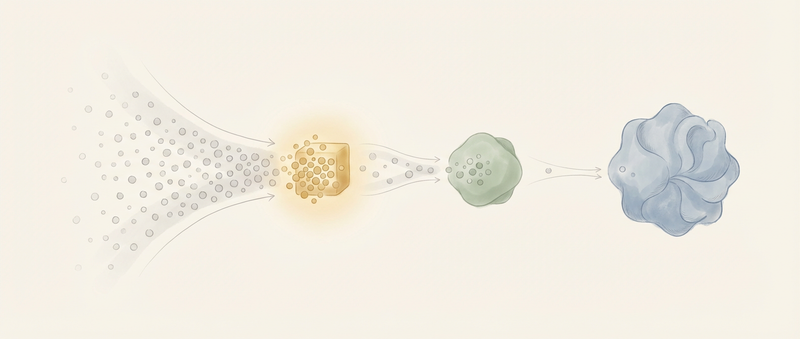

One of the coolest developments is knowledge distillation - basically teaching a small “student” model from a larger “teacher” model. You take something like DeepSeek-R1 (a reasoning-focused large model), extract its reasoning patterns, and use that to train a much smaller model.

The result? A 7 billion parameter model that performs like a 70 billion parameter model on specific tasks. It’s not magic - it’s just smart engineering.
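One common way to do this is to collect the teacher’s reasoning traces and fine-tune the student on them. The sketch below shows only the data-collection half, with a toy function standing in for the teacher; the function names and the prompt/completion record format are assumptions for illustration, not how any particular distilled model was built.

```python
def build_distillation_set(problems, teacher, is_correct):
    """Collect teacher reasoning traces to use as fine-tuning data for a student.

    problems:   iterable of (question, reference_answer) pairs
    teacher:    callable question -> (reasoning, answer); stands in for a large model
    is_correct: callable (answer, reference_answer) -> bool
    """
    examples = []
    for question, reference in problems:
        reasoning, answer = teacher(question)
        # Keep only traces that reach the right answer, so the student
        # does not learn from the teacher's mistakes.
        if is_correct(answer, reference):
            examples.append({
                "prompt": question,
                "completion": f"{reasoning}\nAnswer: {answer}",
            })
    return examples


# Toy stand-in for a teacher model (hypothetical, for illustration only).
def toy_teacher(question):
    a, b = (int(part) for part in question.split("+"))
    return f"Add {a} and {b}.", str(a + b)


data = build_distillation_set(
    problems=[("2+3", "5"), ("10+7", "17")],
    teacher=toy_teacher,
    is_correct=lambda got, want: got.strip() == want.strip(),
)
print(len(data), "training examples")
```

The student then gets ordinary supervised fine-tuning on these records, so it learns to produce the reasoning as well as the answer.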

But capability alone isn’t enough. For production use, you need reliability.

Building Reliable Systems

Here’s something nobody talks about enough: small models are easier to control.

Large models are kind of like really smart but occasionally unpredictable employees. They might go off-script, hallucinate facts, or interpret your instructions creatively. With small models, you can be more prescriptive and build more reliable systems.

Constrained Decoding

New techniques like DOMINO and Formatron let you guarantee that model outputs follow strict formats - JSON schemas, regex patterns, whatever you need. And they do it with virtually no performance overhead.

Why does this matter? Because in production systems, reliability beats creativity. If you need a model to output valid JSON for your API, you can’t afford to have it occasionally decide to write poetry instead.
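The core idea is simple enough to show with a toy: at each step, throw away every token that would make the output invalid, then pick the best of what’s left. To be clear, this is not the DOMINO or Formatron algorithm (those handle full grammars and real tokenizers efficiently); it’s a sketch with a made-up vocabulary and a fake scoring function standing in for model logits.

```python
import json


def constrained_greedy_decode(score_fn, vocab, allowed_outputs, max_steps=16):
    """Greedy decoding that only picks tokens keeping the output a valid prefix.

    score_fn:        callable (prefix, token) -> float, stands in for model logits
    vocab:           list of token strings
    allowed_outputs: set of complete strings the output must match exactly
    """
    prefix = ""
    for _ in range(max_steps):
        if prefix in allowed_outputs:
            return prefix
        # Mask: keep only tokens that extend the prefix toward some allowed output.
        candidates = [
            tok for tok in vocab
            if tok and any(out.startswith(prefix + tok) for out in allowed_outputs)
        ]
        if not candidates:
            raise ValueError(f"no valid continuation after {prefix!r}")
        prefix += max(candidates, key=lambda tok: score_fn(prefix, tok))
    raise ValueError("max_steps reached before completing an allowed output")


vocab = ['{"label": "', "bug", "billing", "other", '"}', "Roses ", "are ", "red"]
allowed = {'{"label": "bug"}', '{"label": "billing"}', '{"label": "other"}'}


def poetic_scores(prefix, token):
    # Hypothetical model that would rather write poetry than JSON.
    preferences = {"Roses ": 5.0, "are ": 4.0, "red": 3.0, "billing": 2.0}
    return preferences.get(token, 0.0)


result = constrained_greedy_decode(poetic_scores, vocab, allowed)
print(json.loads(result))
```

Even though the fake model scores "Roses " highest, that token never survives the mask, so the output always parses as JSON.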

Moving Logic Out of Prompts

With large models, we often stuff complex logic into prompts because the model is smart enough to figure it out. With small models, you flip this: move the complex logic to external code and use the model for what it’s actually good at.

This is actually better engineering. Your logic is in code (testable, debuggable, version-controllable) instead of buried in prompt strings. Your model does the language understanding part. Clean separation of concerns.
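A small sketch of that split, using a refund workflow as the example. The refund limit, the field names, and the stubbed model are all hypothetical; the point is only where each piece of logic lives.

```python
import json

REFUND_LIMIT_USD = 100.0  # hypothetical business rule


def extract_request(message, model):
    """Use the model only for language understanding: free text -> structured fields."""
    prompt = (
        "Extract the intent and amount from the message as JSON "
        'with keys "intent" and "amount_usd".\n'
        f"Message: {message}"
    )
    return json.loads(model(prompt))


def decide(request):
    """Business logic lives in plain code, where it can be unit-tested."""
    if request["intent"] != "refund":
        return "route_to_agent"
    if request["amount_usd"] <= REFUND_LIMIT_USD:
        return "auto_approve"
    return "needs_manager_review"


# Stub standing in for a small local model (hypothetical).
def fake_model(prompt):
    return '{"intent": "refund", "amount_usd": 42.5}'


decision = decide(extract_request("I want my $42.50 back", fake_model))
print(decision)
```

Changing the refund limit is now a one-line code change with a test next to it, instead of a prompt edit you have to re-evaluate against the model.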

Why This Matters

We’re at an inflection point. The industry spent years racing to make models bigger, but we’re hitting diminishing returns. The next frontier isn’t scale - it’s efficiency, specialization, and smart architecture.

Small models democratize AI. They’re accessible to people without data centers. They enable privacy-preserving applications. They make AI economically practical.

And they change how we think about building with AI. Instead of throwing a trillion parameters at every problem, you start asking: What does this task actually need? How can I structure it better? Should this logic live in the model or in code?

Getting Started

If you want to experiment with this yourself:

- Check out llama.cpp and llama-server for running models locally

- Try models like SmolLM3-3B (great all-rounder with 128K context), Gemma-3-12B (excellent multimodal support), or Llama-3.1-8B (solid general-purpose model)

- Experiment with constrained decoding libraries like DOMINO and Formatron

- Use tools like llama-swap to switch between multiple models without reloading everything from scratch

The barrier to entry has never been lower. You can download a capable model and run it on your laptop in about 10 minutes.
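Once llama-server is running, talking to it from code takes a few lines, because it exposes an OpenAI-compatible chat endpoint. This sketch assumes the server is already up on its default address (localhost, port 8080) with a model loaded; the helper names and the temperature value are my own choices.

```python
import json
import urllib.request


def build_chat_request(prompt, base_url="http://localhost:8080", max_tokens=256):
    """Build a request for llama-server's OpenAI-compatible chat endpoint."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt, **kwargs):
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask_local_model("Summarize RoPE in one sentence")` returns the model’s reply as a string. Nothing leaves your machine.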

Quantization: Making the Most of Limited Hardware

Here’s the trick that makes running models on consumer hardware practical: quantization. Instead of storing model weights at full precision (16-bit or 32-bit floating point), you reduce them to lower precision. This dramatically shrinks memory requirements.

A 7B parameter model at 16-bit precision needs around 14GB of VRAM for the weights alone (7 billion parameters at 2 bytes each). Quantize it to 4-bit, and suddenly it fits in 4-5GB. That’s the difference between “I need a $2000 GPU” and “my laptop can handle this.”
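The arithmetic behind those numbers is just parameters times bits per weight. A quick sketch (weights only; it deliberately ignores the KV cache and runtime overhead):

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Memory for the weights alone, in GB (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


for label, bits in [("FP32", 32), ("FP16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"7B at {label}: {weight_memory_gb(7e9, bits):.1f} GB")
```

Pure 4-bit works out to 3.5GB. Real 4-bit files land closer to 4-5GB because quantization formats also store scaling factors per block of weights and often keep a few tensors at higher precision.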

There are two main approaches:

Native quantization is when models are trained or post-trained with quantization built in. GPT-OSS-20B is a perfect example - it ships natively in MXFP4, a 4-bit microscaling floating-point format from the Open Compute Project’s MX specification, which OpenAI applied to the model’s mixture-of-experts weights. There’s no full-precision version. This approach generally preserves quality better because the model learns to work within the precision constraints.

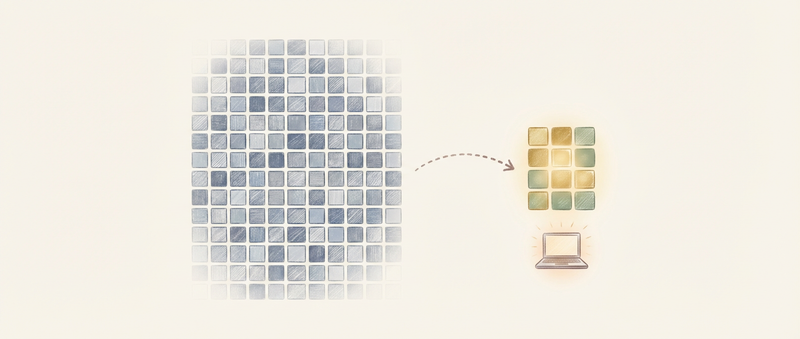

Post-training quantization (PTQ) is when you take a full-precision model and convert it to lower precision after training. GGUF is the dominant format here, offering different quantization levels:

- Q8_0: 8-bit - very close to full precision quality

- Q6_K: 6-bit - balanced quality and size

- Q5_K_M: 5-bit - sweet spot for many use cases, minimal quality impact

- Q4_K_M: 4-bit - aggressive compression, noticeable but acceptable quality loss for many tasks

- Q3_K_M and below: 3-bit and lower - significant quality degradation, use with caution

The quality tradeoff is real. Research shows that down to around 5 bits per weight, quality loss is minimal. Below that, you start seeing degradation in benchmark performance. But here’s the thing: for many practical tasks, a well-quantized Q4 model is still useful. You’re trading some capability for the ability to run locally at all.
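To get a feel for why fewer bits means more error, here’s a toy block quantizer. It uses plain symmetric round-to-nearest with one scale per block, which is much simpler than what GGUF’s K-quants actually do, and the weights are made-up numbers.

```python
def quantize_block(values, bits):
    """Symmetric round-to-nearest quantization of one block of floats."""
    qmax = 2 ** (bits - 1) - 1                         # 7 for 4-bit, 127 for 8-bit
    scale = max(abs(v) for v in values) / qmax or 1.0  # fall back to 1.0 for all-zero blocks
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def dequantize_block(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


def max_abs_error(values, bits):
    """Largest round-trip error for one block at the given bit width."""
    codes, scale = quantize_block(values, bits)
    restored = dequantize_block(codes, scale)
    return max(abs(a - b) for a, b in zip(values, restored))


weights = [0.12, -0.53, 0.98, -0.07, 0.31, -0.89, 0.44, 0.02]  # made-up block
for bits in (8, 6, 5, 4, 3):
    print(f"{bits}-bit: max abs error {max_abs_error(weights, bits):.4f}")
```

Each bit you drop roughly doubles the spacing between representable values, which is why the error stays small for a while and then climbs quickly.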

Choose your quantization level based on your hardware and quality requirements. For a 7-8B model, if you have 16GB VRAM, go with Q6 or Q8. Limited to 8GB? Q4 or Q5 is your friend.

Long Context: RoPE and YARN

One of the coolest developments in small models is getting massive context windows on consumer hardware. Remember when I mentioned SmolLM3 with a 128K context? That’s possible because of techniques like RoPE and YARN.

RoPE (Rotary Position Embeddings) is how modern transformers understand where tokens are in a sequence. Instead of adding position information to embeddings like older methods, RoPE rotates feature pairs based on token position. Think of it like encoding position as angles in 2D space - the model can naturally understand relative distances between tokens by looking at the rotation angles. It’s elegant, parameter-free, and scales well. Most modern models (Llama, Qwen, etc.) use RoPE.
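The rotation idea fits in a few lines of plain Python. This is a sketch of the basic formulation that pairs adjacent features; real implementations vectorize it and some pair features differently, but the math is the same. The query and key values are arbitrary.

```python
import math


def rope(vec, position, base=10000.0):
    """Apply rotary position embedding to one even-length vector."""
    dim = len(vec)
    out = []
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)   # rotation angle for this feature pair
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out += [x * cos_t - y * sin_t, x * sin_t + y * cos_t]
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.8, 0.5]
k = [1.1, 0.4, -0.6, 0.9]

# Same relative distance (2 tokens apart) at two different absolute positions.
near_start = dot(rope(q, 5), rope(k, 3))
far_along = dot(rope(q, 105), rope(k, 103))
print(abs(near_start - far_along) < 1e-9)
```

The two attention scores match because rotating both vectors leaves only the difference in angles in the dot product. That’s the "relative distance for free" property.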

But there’s a catch: models trained with RoPE typically can’t handle contexts longer than what they saw during training. Train on 4K context, and the model falls apart at 8K.

YARN (Yet Another RoPE extensioN method) solves this. Instead of treating all RoPE dimensions uniformly, YARN uses a clever scaling approach that divides dimensions into groups and scales them differently. High-frequency dimensions (which encode fine-grained positional info) get minimal scaling. Low-frequency dimensions (which encode broader positional patterns) get more aggressive scaling. The result? You can extend a model’s context window to 8x, 16x, or even 32x its training length with minimal fine-tuning - we’re talking less than 0.1% of the original training data.
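Here’s a sketch of that per-dimension scaling, following the interpolation formula as I read it in the YaRN paper. The alpha=1 and beta=32 defaults are the values the paper suggests for Llama-family models; treat them, and the function names, as assumptions rather than a reference implementation.

```python
import math


def yarn_frequencies(dim, scale, original_context, base=10000.0, alpha=1.0, beta=32.0):
    """Per-pair RoPE frequencies after YaRN-style interpolation."""
    freqs = []
    for i in range(0, dim, 2):
        theta = base ** (-i / dim)                             # original frequency
        rotations = original_context * theta / (2 * math.pi)   # full turns within the training context
        # Ramp: 0 means interpolate fully (divide by scale), 1 means leave untouched.
        gamma = min(1.0, max(0.0, (rotations - alpha) / (beta - alpha)))
        freqs.append((1 - gamma) * theta / scale + gamma * theta)
    return freqs


def yarn_attention_factor(scale):
    """Extra factor the paper applies to rotated queries and keys at longer contexts."""
    return 0.1 * math.log(scale) + 1.0


freqs = yarn_frequencies(dim=128, scale=8.0, original_context=4096)
print(f"fastest pair: {freqs[0]:.4f} (unchanged)")
print(f"slowest pair: {freqs[-1]:.3e} (original divided by 8)")
print(f"attention factor: {yarn_attention_factor(8.0):.3f}")
```

Pairs that spin many times within the original context are left alone, pairs that never complete a full turn are stretched by the full scale factor, and everything in between gets a blend.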

This is why you can download a 7B model and run it with a 128K context window on your laptop. YARN makes it practical. The combination of quantization (reducing memory per parameter) and YARN (efficiently handling long contexts) is what makes small models genuinely useful for real work.

KV Cache Quantization: The Other Memory Problem

Here’s something most people don’t realize about long context windows: the model weights aren’t the only memory bottleneck. The KV cache (key-value cache) stores attention information for every token you’ve processed. At long contexts, this cache can consume as much VRAM as the model itself.

During inference, transformers cache the keys and values computed for previous tokens to avoid recalculating them. Sounds efficient, right? It is - until you look at the memory cost. For modern 7-8B models with Grouped Query Attention (like Llama 3.1 8B), a 128K context needs about 16GB just for the KV cache at FP16 precision. Older architectures without GQA? That jumps to 60GB+ for the same context length.
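You can check those numbers yourself. The cache holds one key and one value vector per layer, per KV head, per token. The Llama 3.1 8B shape below (32 layers, 8 KV heads, head size 128) is taken from its published config; the "no GQA" row is a hypothetical variant with one KV head per attention head.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, context_tokens, bits=16):
    """Bytes needed to cache keys and values for one sequence."""
    per_token = 2 * n_layers * n_kv_heads * head_dim * bits / 8  # 2 = keys + values
    return per_token * context_tokens


GIB = 1024 ** 3
CONTEXT = 128 * 1024

with_gqa = kv_cache_bytes(32, 8, 128, CONTEXT) / GIB
without_gqa = kv_cache_bytes(32, 32, 128, CONTEXT) / GIB  # hypothetical: 32 KV heads

print(f"FP16 cache with GQA: {with_gqa:.0f} GiB, without GQA: {without_gqa:.0f} GiB")
for bits in (8, 4):
    size = kv_cache_bytes(32, 8, 128, CONTEXT, bits) / GIB
    print(f"{bits}-bit cache with GQA: {size:.0f} GiB")
```

Note that the cache grows linearly with context length, so halving the context halves the cache. Real quantized cache formats come out slightly larger than the pure bit count because they also store per-block scales.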

KV cache quantization solves this by storing the cache at lower precision:

- Q8 (INT8): Nearly lossless quality, cuts KV cache memory in half (~8GB for modern 7-8B models at 128K)

- Q4 (INT4): Acceptable quality loss for most tasks, reduces to 1/4 the size (~4GB)

- Q2 and below: Aggressive techniques like SKVQ can go down to 2-bit keys and 1.5-bit values for truly extreme contexts

In llama.cpp, you can enable KV cache quantization with a pair of flags: --cache-type-k and --cache-type-v (set to q8_0 or q4_0, for example). The quality impact is minimal - perplexity increases by less than 0.01 at Q8, and Q4 is still quite usable for most practical applications.

The real-world impact? A modern 7-8B model with Q4 model weights (~4GB) and Q4 KV cache (~4GB) can handle 128K context in around 8-10GB of VRAM total. That’s solidly in consumer GPU territory - a 3060 12GB or 4060 Ti 16GB handles it comfortably.

This is the full picture: YARN extends the context window, model quantization reduces parameter memory, and KV cache quantization handles the attention cache. Together, these techniques make long-context small models actually viable on consumer hardware.

Choosing the Right Model

Not all small models are created equal. When evaluating models, test them on your actual use cases. Some models suffer from over-alignment - they’re so locked down they’ll flag regular operations as “out of line” and refuse to continue. GPT-OSS-20B is a good example: it’s capable, but it’s trigger-happy about flagging benign operations. That’s the flip side of safety measures. A model that constantly refuses benign requests isn’t useful, no matter how capable it is on paper.

Wrapping Up

I’m not saying large models are going away. GPT-5 or Claude Opus 4 or whatever comes next will probably blow our minds. But I think we’re going to see a shift in how we use them.

The future is hybrid. The future is efficient. The future is small models handling most of the work, with large models as the specialists you call in when you really need them.

And honestly? I’m pretty excited about it. It’s nice to see AI becoming more accessible, more practical, and more grounded in engineering principles instead of just throwing more compute at problems.

Now if you’ll excuse me, I have SmolLM3 running locally with a 128K context window. Because I can. And that’s pretty cool.

References

If you want to dive deeper into the research behind all this, here are the key papers:

- Small Language Models for Agentic AI: Belcak et al., “Small Language Models are the Future of Agentic AI”, arXiv 2506.02153 (2025)

- Tiny Recursive Models: Jolicoeur-Martineau, “Less is More: Recursive Reasoning with Tiny Networks”, arXiv 2510.04871 (2025)

- YARN Context Extension: Peng et al., “YaRN: Efficient Context Window Extension of Large Language Models”, arXiv 2309.00071 (2023)

- Constrained Decoding (DOMINO): Beurer-Kellner et al., “Guiding LLMs The Right Way: Fast, Non-Invasive Constrained Generation”, arXiv 2403.06988 (2024)

- Formatron: “Earley-Driven Dynamic Pruning for Efficient Structured Decoding”, arXiv 2506.01151 (2025)

- KV Cache Quantization: Hooper et al., “KVQuant: Towards 10 Million Context Length LLM Inference with KV Cache Quantization”, arXiv 2401.18079 (2024)

The space is moving fast, and there’s some genuinely fascinating work happening.